
Will ChatGPT be as significant as writing was in 4000 BC?

- Author: Vlada Rusina

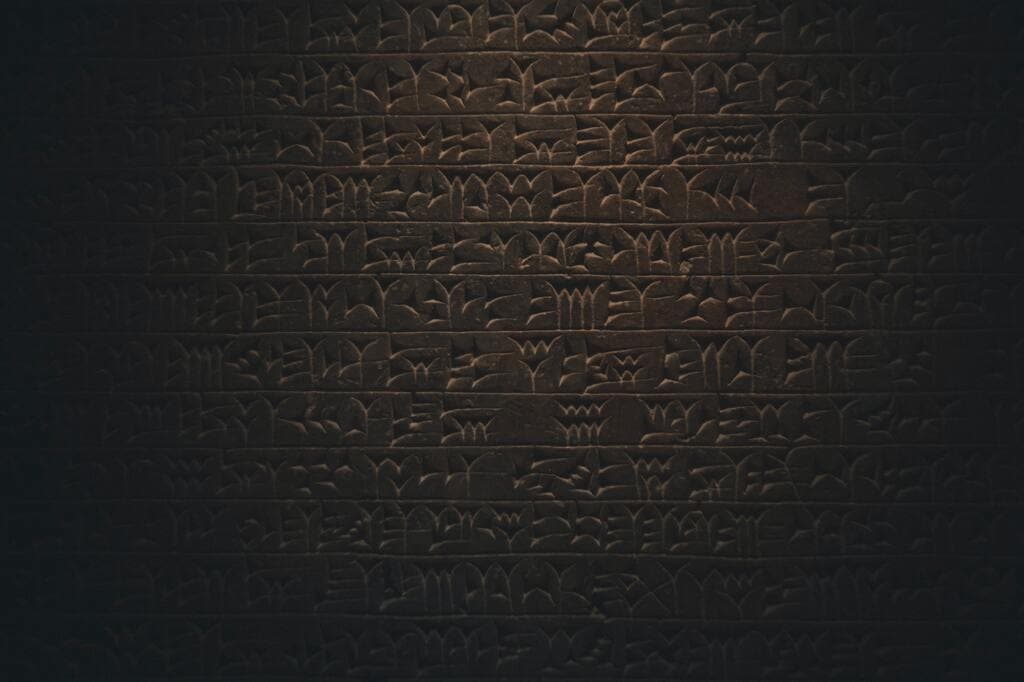

Photo by Egor Myznik.¹

The frenzy around revolutionary text models raises concerns about the future. First came GPT-3, the model family behind the original ChatGPT, with 175 billion parameters. Then Baidu announced ERNIE Bot, built on a line of models that has included one with 260 billion parameters. Finally, GPT-4 was rumoured to have 10 trillion parameters and to be not just a text-only model but a multimodal one. At that scale, models come close to absorbing all the information that exists on the internet, including texts, pictures, and videos. Hence we may soon run out of new data to feed deep learning models. Some researchers are bold enough to suggest that this could even approach the concept of artificial general intelligence (AGI). We can speculate a lot, but one thing is obvious: it is a game changer. For contemporary history, ChatGPT may have the same significance and bring the same opportunities as writing did for ancient civilizations.

Around 4000 BC, when the first cities began to appear, with populations of up to tens of thousands of people, ancient leaders faced a completely new challenge: how to rule all these people? How to inform them about important decisions and laws? How to keep citizens fed and safe? How to keep track of all the agreements with neighbours? Ultimately, how to make people act as members of a united community? Spoken language was not enough to carry these vital messages.

Accordingly, the earliest evidence of writing relates to administrative record-keeping: tracking money and stored goods, counting harvested crops, recording trade deals with neighbouring cities, registering loans, and so on. This is exactly what archaeologists have deciphered on Sumerian clay tablets. Importantly, this information is not just about day-to-day administration. Government itself came to rest on writing. People created bureaucracy, laws, and ideology with the help of writing. As a result, writing allowed leaders to unite atomized tribes, build wide networks, involve thousands of people in joint activities, and thereby reap the benefits of synergy and progress.

Only much later did people begin to use writing as an art form, creating fiction and poetry, to convey complex and abstract ideas in philosophy and mathematics, or to preserve knowledge, all of which are essential as well.

If writing led to the emergence of early civilizations, what will the AI behind ChatGPT be capable of? Can it engage the resources of the whole planet in joint activities? Can it decisively erase borders and overcome national ambitions to meet global targets? Should we grant it the right to replace the workforce for the sake of meeting more challenging targets?

ChatGPT is becoming omnipresent. Reportedly, it can perform the tasks of a huge number of professions. Where people are limited by their physiology and emotions, AI is free of those limits. Where we are atomized and burdened by cultural and religious differences, mindsets, languages, political views, and economic competition, AI can stand above all of these. It may become a universal tool, able to engage all the resources of the globe in planetary-scale efforts toward revolutionary goals for humanity's benefit, such as exploring outer space, preventing global warming, and tackling other big issues. It goes without saying that the world is fragmented, and this fragmentation slows down progress and the solving of key challenges.

At this point, many people view the expansion of AI with suspicion, and this lack of trust has reasonable grounds. It is quite disturbing to fear mass layoffs and to realize that we may no longer be the wisest beings in the Universe. By saving our time and effort, ChatGPT erodes our ability to do research, train our will, develop creativity, and analyze data. It simply feeds us turnkey solutions and enslaves us. Some of our predecessors were probably not very enthusiastic about the spread of writing either.

What we are already experiencing is a flood of AI-generated content (AIGC) on TikTok, Twitter, and many other corners of the internet, to the point that we cannot distinguish AI-generated videos or texts from human-made ones. This undermines our confidence, makes us question the nature of reality, and stirs a fear of losing something vital. What sort of information do we consume? What hidden agenda is promoted in those funny videos or entertaining articles? The problem is only exacerbated by the commercial temptations of big companies and by non-state actors and governments trying to promote their narratives. It will probably take decades for regulators to catch up with the new technologies and establish proper control over them. The Taiwanese computer scientist Kai-Fu Lee considers 50% of AI's outcomes to be negative, which makes this technology quite off-putting.

In light of all this, should we delegate the power of writing to chatbots, and will real AI turn out to be a benign version of Skynet? As the old proverb says, the pen is mightier than the sword.

Footnotes

1. Photo by Egor Myznik on Unsplash